While chatbots like ChatGPT are truly ground-breaking, they have limitations.

In fact, there are many cases in which chatbots simply cannot return a useful answer because of the limitations of the underlying large language model (LLM) and its training data. Experts quickly discover these limitations, but newcomers to a topic are very likely to be tripped up by the confident tone of chatbot responses and miss their mistakes.

This post illustrates why it's important for students to keep learning the basics and practicing their skills despite the convenience and "just good enough" accuracy of today's chatbots. If students grow too dependent on chatbots, they risk not being able to pick up the ball when the chatbots fail.

The Context: Developing Student Assignments

I'm currently developing active learning activities for my programming classes, using the Virtual Programming Lab (VPL) system in Moodle (eClass) to deliver them to my students. I've developed a number of exercises, some in Matlab, others in Java, Verilog and Python. In the summer of 2023, I worked on a set of VPL questions in C, a classic but still commonly used programming language.

C is really "old school", dating back to the 1970s. Because of this, there are plenty of examples for ChatGPT to build its answers on, so one might assume that the chatbot would know everything there is to know about programming in C. However, the language continues to evolve, and new libraries and new hardware targets appear all the time. That means there are contexts for which ChatGPT has no information, or conflicting information, to base its answers on. Furthermore, there are specialized questions that it simply won't be able to figure out, especially for libraries or targets that are not commonly used.

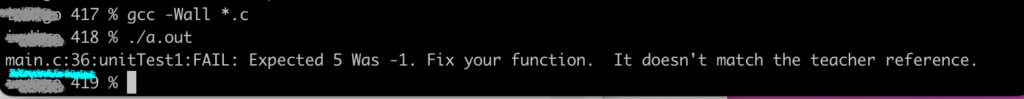

In my case, I was developing a type of student assignment using the Throw the Switch Unity unit-testing framework. Typically, when a test fails, the unit tester outputs the name of the file where the failure occurred, the line number of the failing assertion, the name of the particular unit test, and a comparison between the value returned by the function under test and the reference "true" value it was supposed to return.
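For readers unfamiliar with Unity, here is a standalone sketch of the shape of that default failure report for an integer comparison: file name, line number, test name, the FAIL verdict, then the expected and actual values. It is an illustration, not Unity's code; the helper `format_default_failure()`, the file `test_sum.c` and the test `test_sum_of_two` are hypothetical, and the exact text Unity prints can vary with its version and configuration.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: mimics the layout of a default Unity failure line,
 * "<file>:<line>:<test>:FAIL: Expected <e> Was <a>". Not Unity code. */
size_t format_default_failure(char *buf, size_t size, const char *file,
                              unsigned line, const char *test,
                              long expected, long actual)
{
    assert(buf != NULL && size > 0);
    /* location (file and line), test name, verdict, then the comparison */
    snprintf(buf, size, "%s:%u:%s:FAIL: Expected %ld Was %ld",
             file, line, test, expected, actual);
    /* strlen gives the characters actually stored, even after truncation,
     * unlike snprintf's return value (the would-be length) */
    return strlen(buf);
}
```

With these arguments the buffer holds `test_sum.c:12:test_sum_of_two:FAIL: Expected 5 Was 4`. In a real Unity test file, a failing assertion such as `TEST_ASSERT_EQUAL_INT(5, sum(2, 2));`, where `sum()` is a hypothetical function under test, produces a report of this general form. The file name and line number at the front are exactly the parts a student doesn't need.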

I needed to customize the Unity library slightly because I don't want my students to see the file name or the line number in the unit testing results; neither would help them. But Unity doesn't provide that option, which makes sense: a typical user wants to know where a program failed, not just how it failed. So, out of the box, here's what a student would see from an unmodified version of the unit tester when a test fails:

Quickly scan the documentation

So, wanting to adapt the Unity library, I did what I typically do: I read the manual.

Well, not quite -- it was more of a quick "flip through" of the manual.

I ran a few key search terms through Google, and it returned some results from the Unity documentation and forums. The answer seemed likely to lie in one of the following files: unity.c, unity.h or unity_internals.h.

But I couldn't find a specific implementation of the program behaviour I wanted.

Having been burned a few times when I asked half-baked questions on tech support forums, I didn't want to bother the fine folks on the Unity discussion forum.

So what to do next? More reading? Get out pen and paper and plan out some test programs? Write some arbitrary code and hope for the best?

This is a familiar, uncomfortable juncture, one that I reached many times as a student in the 1990s and have reached many times since. The advent of chatbots in 2023, like that of good search engines in the 1990s, changes the strategies for finding possible solutions.

It's time to apply my own experience and knowledge to solve the problem.

ChatGPT didn't come to the rescue

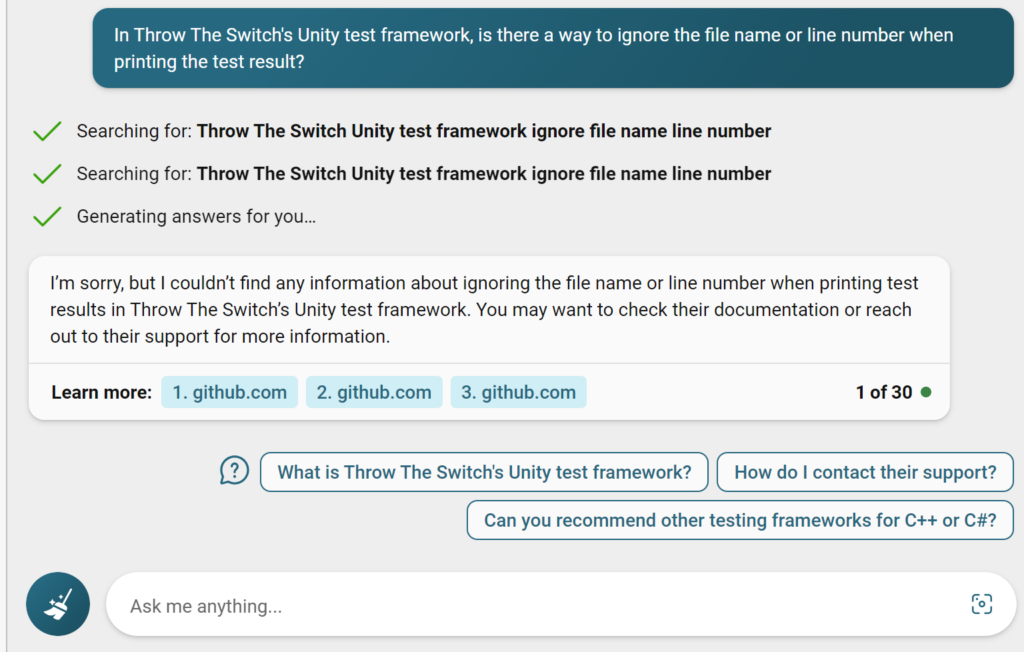

Simple searches weren't turning up a canned answer. But ChatGPT, tucked away in the Bing tab of my Edge browser, offered a convenient way out. So I asked it for help: specifically, whether there was a way to remove non-essential information from the unit tester's output. Here is how it replied:

The search engines turned up nothing useful.

The chatbot couldn't figure it out.

Clearly there was no shortcut here, or at least none that was obvious to me.

Instead, I went back to reading the Unity source code, but this time more deliberately. It was time for me to apply my own knowledge and experience. All the hours I had previously spent working out programming solutions in a variety of applications were going to help me solve the problem.

I focused on the "line" variable in unity.c and found the UnityTestResultsFailBegin() function. This is the function that, when a unit test fails, prints the file name and line number before the expected and actual values are reported. While there is no "pre-made" option for suppressing the printed file name and line number, I could get the result I wanted by modifying the library. In C, we:

- rename the Unity unit test files:
  - unity.c to unity_modified.c
  - unity.h to unity_modified.h
  - unity_internals.h to unity_internals_modified.h
- change the #include references in main.c, unity_modified.c and unity_modified.h so that they point to these new modified headers; and
- add preprocessor defines and conditions.

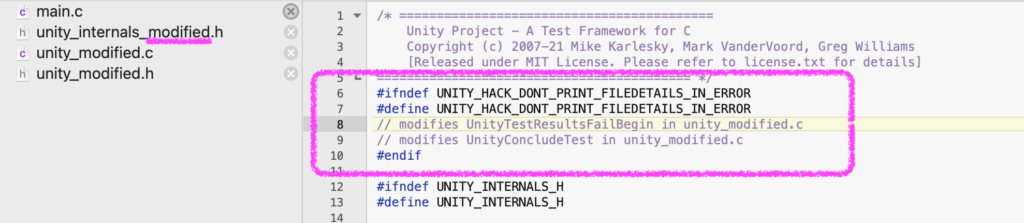

Here is how the "internals" header file (now unity_internals_modified.h) was changed to include a preprocessor define, or "tag", that changes how the library compiles when the tag is present:
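The post doesn't give the tag's actual name, so the fragment below uses a hypothetical `UNITY_HIDE_FILE_AND_LINE` to show what a "tag" means here: a macro defined with no value, because later code only asks whether it exists. In the author's setup the `#define` would live in unity_internals_modified.h; in this sketch the header's contents are inlined so it compiles on its own, and `location_is_hidden()` is a hypothetical function added only to show the check.

```c
/* --- excerpt of a hypothetical unity_internals_modified.h --- */
#ifndef UNITY_INTERNALS_MODIFIED_SKETCH_H
#define UNITY_INTERNALS_MODIFIED_SKETCH_H

/* The tag: defined with no value. Deleting this line restores the
 * default behaviour everywhere the tag is tested. */
#define UNITY_HIDE_FILE_AND_LINE

#endif /* UNITY_INTERNALS_MODIFIED_SKETCH_H */

/* --- any file that includes the header can now test for the tag --- */
int location_is_hidden(void)
{
#ifdef UNITY_HIDE_FILE_AND_LINE
    return 1; /* compiled only when the tag is present */
#else
    return 0; /* compiled only when the tag is absent */
#endif
}
```

An alternative to editing the header is to pass `-DUNITY_HIDE_FILE_AND_LINE` on the gcc or clang command line, which defines the same tag from the build instead; the header approach has the advantage that the setting travels with the modified library files.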

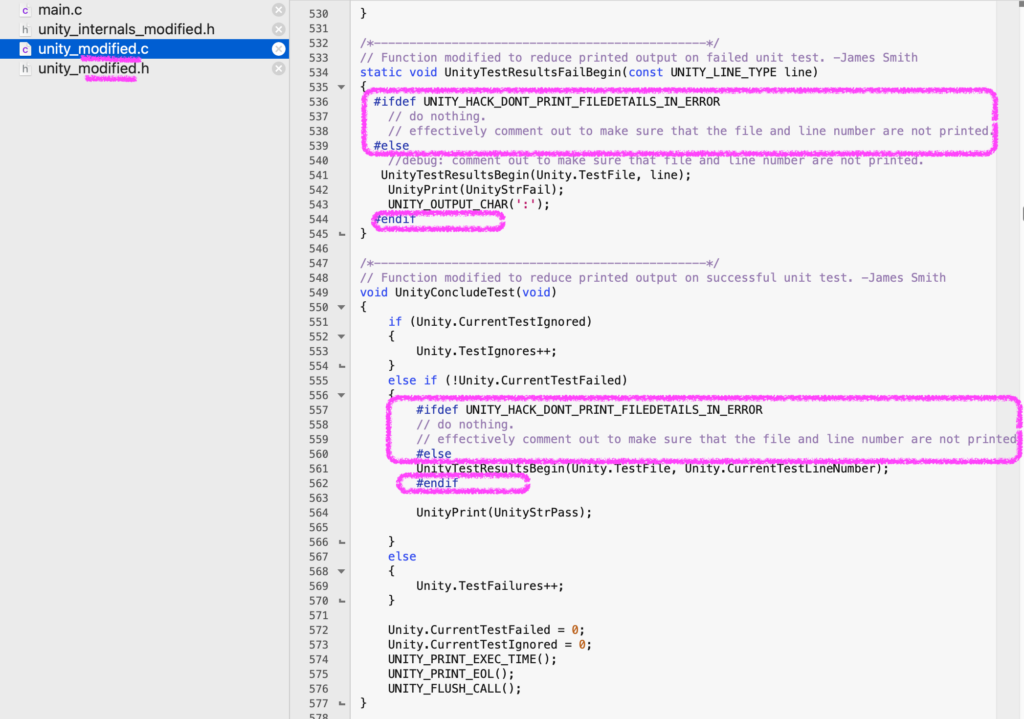

Next, the unity.c file (now called unity_modified.c) needs preprocessor conditionals in the two functions that print the messages for a passing or failing unit test, so that those messages contain less information than before. The top function handles a failing unit test and the bottom one handles a passing test:
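Because the modified functions appear only as a screenshot, here is a self-contained sketch of the same idea rather than Unity's real source. It assumes a hypothetical tag named `UNITY_HIDE_FILE_AND_LINE`: a shared helper writes the `file:line:test:` prefix, two callers append FAIL or PASS, and when the tag is defined the preprocessor drops the file and line from both messages. It writes into a buffer instead of printing so the output is easy to check; all function names here are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical tag; in the post's setup it would come from the modified
 * internals header rather than being defined in this file. */
#define UNITY_HIDE_FILE_AND_LINE

/* Append text to a NUL-terminated buffer, truncating if it is full. */
static void append(char *buf, size_t size, const char *text)
{
    size_t used = strlen(buf);
    if (used < size)
        snprintf(buf + used, size - used, "%s", text);
}

/* Shared prefix for pass and fail messages: "file:line:test:" by default,
 * only "test:" when the tag is defined. */
static void results_begin(char *buf, size_t size, const char *file,
                          unsigned line, const char *test)
{
    assert(buf != NULL && size > 0);
    buf[0] = '\0';
#ifndef UNITY_HIDE_FILE_AND_LINE
    char location[80];
    snprintf(location, sizeof location, "%s:%u:", file, line);
    append(buf, size, location);
#else
    (void)file; /* not printed in the student-facing build */
    (void)line;
#endif
    append(buf, size, test);
    append(buf, size, ":");
}

/* Failing test: the expected/actual comparison would be appended after this. */
void sketch_fail_begin(char *buf, size_t size, const char *file,
                       unsigned line, const char *test)
{
    results_begin(buf, size, file, line, test);
    append(buf, size, "FAIL:");
}

/* Passing test. */
void sketch_pass(char *buf, size_t size, const char *file,
                 unsigned line, const char *test)
{
    results_begin(buf, size, file, line, test);
    append(buf, size, "PASS");
}
```

With the tag defined, a failing test named `test_sum_of_two` starts its report with `test_sum_of_two:FAIL:` and a passing one prints `test_sum_of_two:PASS`; removing the `#define` brings back the `test_sum.c:12:` prefix. Keeping both variants behind one tag, rather than deleting the location code outright, lets an instructor switch back to full diagnostics when debugging the assignment itself.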

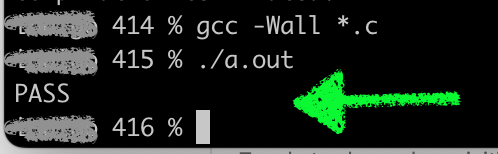

Compiling and running the tests in a terminal window shows that I succeeded, both when the unit test passes:

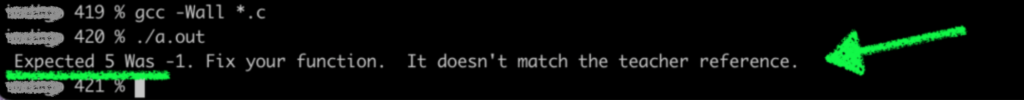

and when it fails:

Problem solved.

This is just one example of a problem that chatbots can't easily solve. There are plenty of others. In some cases the chatbot will say that it can't answer the question. In other cases it will "hallucinate" and come up with an erroneous solution. Either way, it's important for students to understand the limitations of chatbots.

What is the impact on teaching and learning in school?

What is the impact of these chatbots on teaching and on student learning?

First, it shifts the baseline.

The baseline for what students can work on, and work with, has shifted dramatically as a result. We can now ask our students to tackle immensely interesting and broadly scoped problems in their homework and projects.

Second, it requires us to rethink how we teach the basics and evaluate student learning on fundamental topics.

We need to look at creating challenges for our students that aren't easily and directly solved by chatbots.

The kinds of assessments that we have used since the 1990s need to be modified. We need to understand that students have access to search and chat tools on their wrists, in their ears and in their pockets. At all times. Either we build our assessments knowing that they will use those tools, or we find ways to ensure that they don't have access to those tools during assessments.

Any university manager reading this who isn't immediately working with faculty, staff and students to change final exam paradigms, including infrastructure, security and staffing, is missing the boat.

Third, we need to explain the limitations of these chatbots and their impacts on school and professional life.

Chatbots are changing how we teach and learn. They are also going to change how professionals use programming as part of their workflow. It's important for today's students -- and our recent grads -- to find meaningful work that will not easily be supplanted by chatbots. That means being able to understand the limitations of chatbots so that management will continue to value your presence in the workplace -- and to pay your salary!

And for my Engineering students, these chatbots are going to have a particularly interesting impact on our profession. Professional Engineers have a responsibility to the public. Chatbots don't have to worry about that. But we have to ensure that erroneous chatbot solutions don't make their way into engineering designs and products. Keep an ear out for statements from our regulatory bodies on chatbots, as there are sure to be some interesting perspectives and suggestions about how chatbots will affect our workflows.

This isn't a new problem

The basic issue here is no different from the "Arduino problem" that Engineering and Computer Science schools have faced for the past two decades. The availability of generally correct open-source libraries and source code snippets on the internet has allowed for cut-and-paste problem solving in programming and hardware projects and assignments. It's gotten easier with sites like GitHub and Stack Overflow, and even more so with chatbots and tools like code "co-pilots".

Students have gotten used to being able to use search engines, and now chatbots, to find solutions to homework, project and exam problems. Shortcutting to the solution promotes a superficial understanding of the underlying problems because students, like the rest of us, have gotten used to

Shortcutting requires little insight on the part of the learner and can fool the assessor into thinking that students are more capable than they are. Chatbots have simply amplified this problem because they can synthesize information from a multitude of sources quickly and, often, effectively.

The buck stops with you, not the chatbot

Chatbots are being used to solve problems more and more.

That said, it's clear that chatbots don't have all the answers. This blog post is one example.

However, the convenience and capability of chatbots make it obvious that they will be used. The impact they are having in schools and the impact they will have in professional contexts cannot be overstated.

For instructors: we need to come up with new approaches to teaching and assessing. Try out the chatbots to get a better understanding of where these new technologies are taking us.

For students: get used to using chatbots. But make sure that you don't lose sight of the fundamentals and don't pass up opportunities to do work without the aid of chatbots. Use the chatbots for learning, but don't let them do the learning for you.

Because when you're faced with a problem that the chatbot can't solve -- correctly or at all -- it'll be up to you to do the work!

James Andrew Smith is a Professional Engineer and Associate Professor in the Electrical Engineering and Computer Science Department of York University's Lassonde School, with degrees in Electrical and Mechanical Engineering from the University of Alberta and McGill University. Previously a program director in biomedical engineering, his research background spans robotics, locomotion, human birth and engineering education. While on sabbatical in 2018-19 with his wife and kids, he lived in Strasbourg, France, taught at INSA Strasbourg and Hochschule Karlsruhe, and wrote about his personal and professional perspectives. James is a proponent of using social media to advocate for justice, equity, diversity and inclusion as well as evidence-based applications of research in the public sphere. You can find him on Twitter. Originally from Québec City, he now lives in Toronto, Canada.